ZotSight -- Smart Object Tracking

People forget things. Objects are put into cupboards, bookcases, and refrigerators and are never retrieved because they end up out of sight. They are then forgotten and wasted, and duplicates are bought.

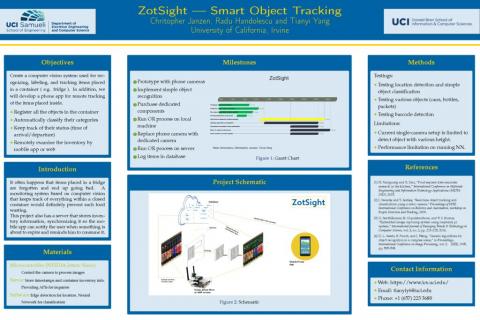

This project is a smart object tracking system that can run on embedded devices to digitize containers such as shelves and refrigerators. Using a hybrid model that combines a traditional edge-detection algorithm with a novel neural network, the system first records the category of each inbound or outbound object, then updates its positional information efficiently. Once this information is uploaded to a remote server, users can check the stored contents from a mobile application whenever they have an Internet connection.
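The bookkeeping side of this flow (record a category when an object goes in or out, then update positions) can be illustrated with a small sketch. This is not the project's actual code: the names `Inventory`, `TrackedItem`, `record_inbound`, and `record_outbound` are hypothetical, and the bounding boxes are assumed to come from the detection stage, which is not shown.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Assumed box format: (x, y, width, height) in pixels.
BBox = Tuple[int, int, int, int]


@dataclass
class TrackedItem:
    category: str
    bbox: BBox


def _center_distance_sq(a: BBox, b: BBox) -> float:
    """Squared distance between the centers of two boxes."""
    ax, ay = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx, by = b[0] + b[2] / 2, b[1] + b[3] / 2
    return (ax - bx) ** 2 + (ay - by) ** 2


@dataclass
class Inventory:
    """Hypothetical in-memory record of what is inside one container."""
    items: List[TrackedItem] = field(default_factory=list)

    def record_inbound(self, category: str, bbox: BBox) -> None:
        # An object entered the container: store its category and position.
        self.items.append(TrackedItem(category, bbox))

    def record_outbound(self, category: str, bbox: BBox) -> bool:
        # An object left the container: drop the stored item of the same
        # category whose position is closest to where it was last seen.
        candidates = [i for i in self.items if i.category == category]
        if not candidates:
            return False
        nearest = min(candidates,
                      key=lambda i: _center_distance_sq(i.bbox, bbox))
        self.items.remove(nearest)
        return True

    def counts(self) -> Dict[str, int]:
        # Number of stored items per category.
        return dict(Counter(i.category for i in self.items))
```

Matching an outbound object to the nearest stored item of the same category is only one possible policy, chosen here for simplicity.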

Currently, the system is deployed on an NVIDIA Jetson Nano development board with an attached IMX219 camera (8 MP, 160° FOV). The Jetson Nano runs a Linux operating system with a custom, self-compiled kernel. Core computer vision libraries are also recompiled so that they can use the CUDA cores on the dev board. A hybrid algorithm reads the video stream and processes the corresponding positional and categorical data.
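As an illustration of how the video stream might be opened on this hardware, the sketch below builds a GStreamer pipeline string of the kind commonly used with CSI cameras on the Jetson Nano. It assumes the stock `nvarguscamerasrc` and `nvvidconv` elements from NVIDIA's JetPack image are available; the function name `csi_pipeline` and the default resolution and frame rate are hypothetical and not taken from this project.

```python
def csi_pipeline(sensor_id: int = 0, width: int = 1280, height: int = 720,
                 fps: int = 30, flip_method: int = 0) -> str:
    """Build a GStreamer pipeline string for a CSI camera on a Jetson board.

    Assumption: the nvarguscamerasrc and nvvidconv elements are installed,
    as they are on a standard JetPack image.
    """
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width=(int){width}, height=(int){height}, "
        f"framerate=(fraction){fps}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width=(int){width}, height=(int){height}, "
        f"format=(string)BGRx ! "
        f"videoconvert ! video/x-raw, format=(string)BGR ! appsink"
    )


# Usage with an OpenCV build that has GStreamer support (not executed here):
#   import cv2
#   cap = cv2.VideoCapture(csi_pipeline(), cv2.CAP_GSTREAMER)
#   ok, frame = cap.read()
```

The final `videoconvert` step hands frames to OpenCV in BGR order, which is the layout most OpenCV functions expect.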

Porting the code from x86_64 to ARM is mostly complete, but because of a hardware shipment delay, we expect to run a comprehensive compatibility test over the winter break. Most library dependencies have been recompiled specifically for the NVIDIA Jetson so that calculations can be accelerated on its CUDA cores.

In the future, we plan to build a remote server to store the data captured by our system, along with a mobile app that provides a user-friendly interface for interacting with it.